Security is love

Figure 1: "Power without love is reckless and abusive, and love without power is sentimental and anemic. Power at its best is love implementing the demands of justice, and justice at its best is power correcting everything that stands against love." – Martin Luther King Jr.

Avast! Spoils and Plunder!

Let's start with a story of real piracy. Formed in 1988, the Avast company is probably known to you for its anti-virus products. It began as a non-profit foundation for community development. Today it's a global cyber-security empire worth $8 billion, employing almost 2000 staff in 25 offices around the world. Many people place a lot of trust in its security products, which include anti-virus, firewalls, and password management. It has 435 million monthly active users.

However, Avast's anti-virus software and browser extensions were found to collect user data and store it indefinitely without customers' knowledge. Not just a little bit. Not just visited sites. But very detailed information from hundreds of millions of users over six years - "more than eight petabytes of browsing information dating back to 2014". This sensitive data, which could be re-identified and linked to individual users, includes videos watched, search queries, religious beliefs, health concerns, political views, locations, and financial status. It was then resold to over 100 companies for targeted advertising through a subsidiary company, Jumpshot. On January 30, 2020, after press investigations exposed the practice, Avast announced that it would shut Jumpshot down and stop reselling the data. In February 2024 the FTC ordered Avast to pay $16.5 million and banned it from selling browsing data for advertising.

That's obviously a massive betrayal. But why single out Avast? No reason in particular; they were simply the company on the front page the day I wrote this article, and it seemed like a good place to start the story.

Anyone who's been paying attention to technology news over the past decade knows such criminality is rife. Even when governments fine companies millions, even billions, they shrug it off as the cost of doing business. Big tech companies spy on you, violate your privacy, and steal and sell your data with impunity.

In fact it has become the standard business model in tech, following a pattern of building trust and then burning it to "pivot" or cash out. Enshittification, Cory Doctorow's term, describes this rise and subsequent degeneration of digital platforms. According to the Financial Times, it is a problem endemic to every commercial online service.

Personally, I prefer Crypto-Gram commenter Clive Robinson's summary of this timeless craft:

Bedazzle, Beguile, Bewitch, Befriend, and Betray

More concretely, in cybersecurity the ruse is:

- Get people to trust in a product by scaremongering, false promises or threats.

- Get people to depend on the product, through network effects, lock-in, social pressure, or even buying regulatory mandates.

- And finally, use that dependency to betray customers, for example by selling the company to an unethical acquirer, breaching their privacy or compromising their assets.

Trust and trustworthiness

It might help to look at some of the concepts in play, starting with trust. Ross Anderson's book Security Engineering draws a useful distinction between trust and trustworthiness.

If something is trusted, that may be a good or a bad thing. A rock climber trusts a rope, or in other words depends upon it. If you drive to work each day, you trust your car. If it breaks down you can't work. You might lose money or even your job. A whole series of things can depend on something trusted. It is the proverbial link in a chain.

However, if your car has never broken down in 20 years you might claim it is trustworthy. You have some evidence to believe it may continue to be worthy of your dependence on it.

As with science, the evidence necessary for trustworthiness must be gathered, not given by "assurances". The required conditions are choice, access, legibility, understanding and verification. Let's examine these.

Choice

Trust in something is not always a choice. In situations beyond your control, like flying in an unsafe airliner, you are essentially forced to trust the engineering skills of others.

Always ask yourself, "Do I have a choice?". If you are ever told "There is no alternative" or feel under pressure to trust some prescribed security, there's a very good chance you're being conned or manipulated. You probably feel you have little choice but to trust your online banking arrangements. Sadly, however, these banks are themselves not trustworthy, and that is why, like Ross Anderson, I don't do online banking and never will.

Many security products, such as those from Microsoft, appeal to people as "making security easy", whereas what they are really doing is limiting practical choice.

Access and legibility

You cannot trust something you cannot see. Suppose I tell you I have a written contract in my filing cabinet that will pay you whatever salary you like to do any job or travel anywhere. It inspires no confidence if I add that it will only work as long as you never see it. This is what we call "proprietary software".

Understanding

If I get out the contract to show you, and read it, it's no use to you if it's in Ancient Aramaic. How is a witch supposed to trust the word of the witch-finder before she is burned, if the judgement is read out in Latin?

Verification

And assuming the contract is in legible, understandable English, how do you prove its worth? What concrete and demonstrable assurances does it come with? The problem in security is that you cannot prove a negative: a quiet year no more proves your defences work than the absence of tigers proves that a "lucky rock" keeps them away. And if the only disproof available is the catastrophic loss of your business, it arrives too late to be of any use.

Digital value

Let's say we put our trust in something. Is that trust deserved if every week the product becomes outdated and needs replacing (or, as the trick goes in tech, "updating")? Of course, we are used to consumable products like milk or light-bulbs. However, we understand why those products need replenishing. Does that logic work for cybersecurity?

What are we now trusting? The product, or the supplier's honesty? Can we trust online updates of computer products if companies can use them to sabotage our property for their own profit?

This is related to solutionism, the dangerous ideology of Silicon Valley that Evgeny Morozov criticises as the spirit of digital capitalism: we solve technologically created problems by adding ever more technology, which in turn creates new problems…

So far we've only considered solutions that fail to meet our needs. But there is a further stage of rotten technology. Something is iatrogenic when it sets out to fix a problem but in the process makes it worse.

An example of a technology that makes things worse is the digital key fob for cars. Car theft has risen enormously since poor electronic security made cars easy to steal. Although many drivers dislike this technology and prefer old-style metal keys, manufacturers continue to push it on them, and some drivers have revived old-fashioned steering locks to stop cars with the latest technology from being stolen.

Other technologies that invite more problems than they solve include infrastructure-level system management tools, centrally managed supply chains, and indeed things like password managers. Any tool that, "for convenience", puts all our eggs in one basket will fail catastrophically at some point, because it works against the principles of risk distribution and of hybrid vigour through diversity. Such tools make class breaks, attacks that compromise every instance of a system at once, more likely. In 2020 the compromise of SolarWinds' Orion software pushed a backdoored update to as many as 18,000 public and private organisations, and gave attackers a route into the systems connected to them.

But there is something still worse. The Shirky principle is the adage that "institutions will try to preserve the problem to which they are the solution". This maxim is close to a gold standard in systems theory, with variations in Pournelle's Iron Law of Bureaucracy, Gall's Systemantics, Donella Meadows' leverage points and Jane Jacobs' work on urban complexity. It also relates to Upton Sinclair's quip that "It is difficult to get a man to understand something, when his salary depends on his not understanding it".

In a nutshell, this is the "Security Industry", or perhaps more appropriately the Insecurity Industry, which is starting to look more and more like a protection racket as a handful of monopoly mobs duke it out for the right to capture the rest of us as chattel in their walled gardens. The problem is that it's a business, and a set of products, with no real incentive to actually protect customers.

The psychological analogy we give this is factitious disorder imposed on another, also known as Munchausen syndrome by proxy. It is where an ostensible caregiver keeps a patient sick in order to continue the caring relationship for their own benefit.

Never before have there been industries so perfectly placed to create the problems for which they sell the solutions. And never before have we strayed so far from anything resembling "free markets" while still under the illusion that we live in a demand-driven, free-market capitalist economy.

Are we being cynical here? Surely nobody would create security problems simply to make money by selling the solutions? Wouldn't that be the broken window fallacy in action? To understand more, let's move on to our next factor: psychopathy…

Psychopaths in Tech

None of this might happen if computer technology were run by nice people. It is perhaps an overplayed trope that business leaders are disproportionately psychopathic. But one area of enormous concern remains: psychological attitudes in tech, where controlling behaviours, arrogant talking-down, mansplaining, cult-like assumptions of normativity and ugly domineering behaviours are rife.

Maëlle Gavet's Trampled by Unicorns is a catalogue of technology companies staffed by people who lack human empathy, worldly understanding and self-regulation, and who massively overvalue immediate rewards while avoiding complex, humane or long-term thinking.

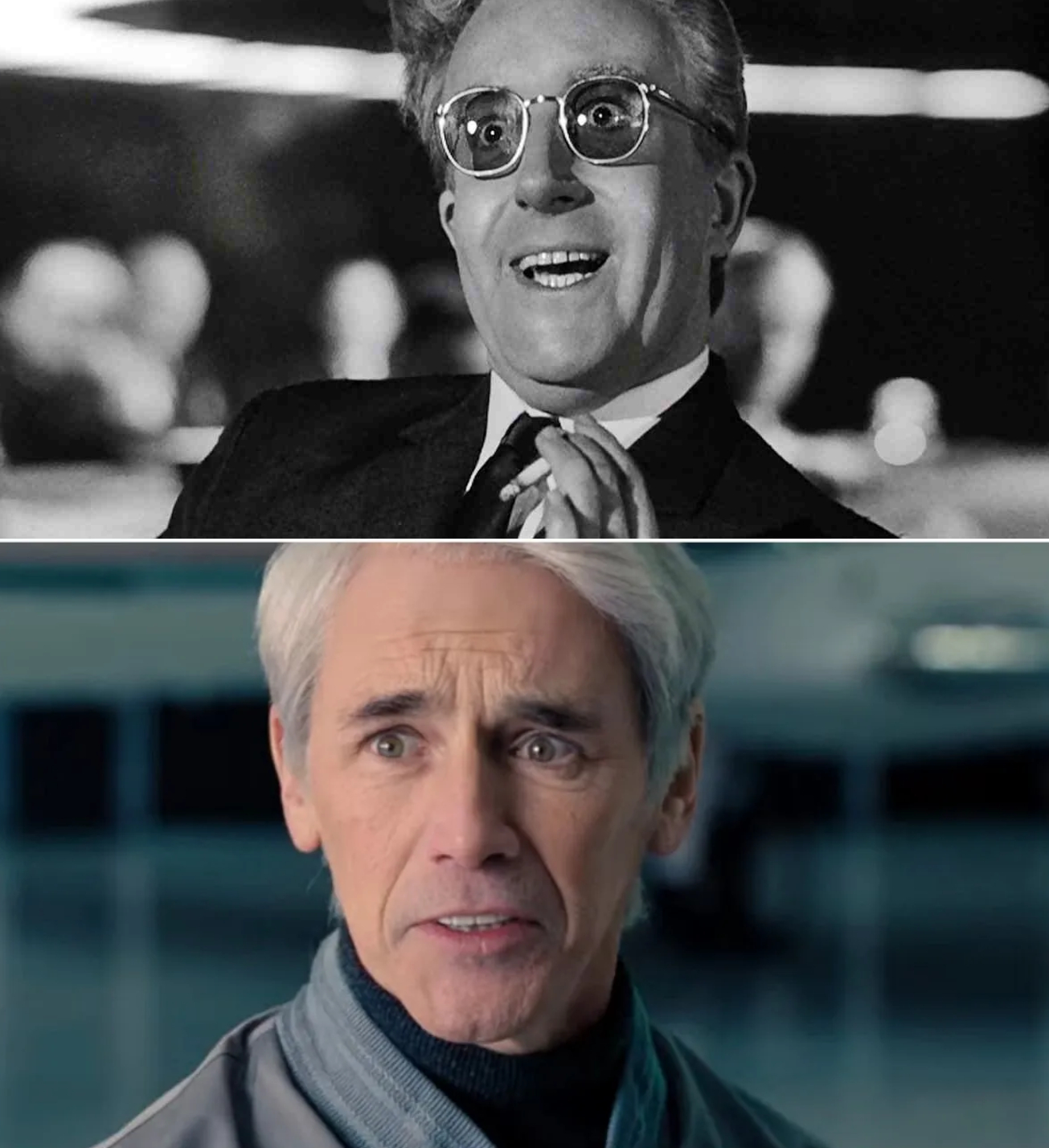

The characters of Peter Isherwell (played by Mark Rylance) in Adam McKay's "Don't Look Up" and Dr. Strangelove (played by Peter Sellers) in Stanley Kubrick's eponymous film epitomise the grandiose exceptionalism of the narcissistic, psychopathic "tech-bro" who just wants to make the world a "better place" (for profit).

These seem like exactly the wrong people to be within a country mile of securing other people's digital lives. Indeed, we all know exactly what Mark Zuckerberg thinks of his Facebook users.

Figure 2: "Tech megalomaniacs"

The thing is, to offer anything that even looks like security, one needs to care, to plan, to think ahead and hold what one cares for in mind, as a mother offers security to her child. Psychologically, this model of security is clustered somewhere close to the common conception of love. It may be contrasted with protection as a provision, which feels like a more masculine flavour of security. Whether or not security can be thought of as "gendered", the contrast still reveals two deeply different concepts of security.

But this is nothing new. Joseph Weizenbaum, who created ELIZA, the original chatbot, in the 1960s, wrote extensively about the ethics and indeed the human purpose of coding, and the misuse of digital power. His 1976 book Computer Power and Human Reason remains a classic. His work is still analysed today, and is highly recommended for anyone worried about AI.

Unfortunately, modern corporate software is a train-wreck of hastily thrown-together cruft, built with the purpose of extracting money. It's pushed by smartly dressed, confident-sounding apologists who speak nonsense and lies about reliability, security and ethics. Google famously fired the two co-leads of its Ethical AI team in quick succession, and such roles, while grand in name, are generally considered untenable in any large technology company.

Computers change us

Computers seem to do something to certain people who get power and profit from them. As with firearms or cars, perfectly normal people like your mother or neighbour can become monsters in the presence of technology. According to Andrew Kimbrell, action at a distance, diffusion of responsibility, and quasi-religious idolatry are a gateway to Cold Evil, where latent malevolent, authoritarian and aggressive traits are unleashed. To some kinds of people, all technology, regardless of its ostensible use as a "tool", becomes a weapon.

Fifty years after Gerald Weinberg gave us egoless programming, tech execs are still unable to separate their puff, bluster, pride, and brand image from common-sense practical engineering. Whether it's selling personal information, installing spyware, or deliberately breaking people's devices, they seem to have almost no ability to shield their customers' security from their own greed.

So, thinking rationally for a moment: for our security, would we naturally choose psychopaths wielding defective weapons?

The answer, bizarrely, is yes, but it has little to do with rationality.

Why we trust strong leaders

So how much of the dreadful security landscape is our own fault? "Strong leaders" invariably turn out to be bad choices. Sadly, our brains seem wired to confuse strength with competence. Big Tech companies project themselves as powerful in order to command our fealty. When we are in doubt about cybersecurity, we tend to turn to established brands as havens of safety. Let's use Diane Dreher's checklist from her Psychology Today piece to see why:

Authority

The word authority comes from the same Latin root as "author", auctor, one who originates. But today we confuse it with power and popularity. Obedience and adoration are the greatest enemies of reason.

People defer to what they perceive to be an "authority", and given a choice between authorities they tend to select whichever appears strongest.

A key lever in Milgram's obedience experiments was the lab coats of the "scientists". Tech companies present themselves not merely as pedlars of gadgets and gizmos, but as 'authorities', wearing the garments of authority. They cultivate a mythology of being staffed by the greatest minds of our generation; everybody at Google or Facebook must be a genius… right?

Despite Facebook driving teens to suicide and selling our personal data, Google killing almost every product it's ever made, and Microsoft failing for 40 straight years to produce an operating system that isn't full of holes, people still "look up" to them. Brand symbols have enormous semiotic power. As soon as IT managers see the familiar coloured logos of Microsoft or Google their eyes glaze over and reason exits the building.

Limited Information

Authoritarians seek to cut people off from valid information. You cannot question what you do not know about. Ignorance is strength (for the party).

Figure 3: "Ignorance is strength"

Free open source software, which respects users' choice and security, puts people's right to examine and change source code at the centre of its philosophy. By contrast, proprietary corporate software hides itself from you in order to profit. It also dishonestly claims that being opaque makes it more secure.

This goes back to our earlier criteria for trust. If you doubt something is secure, but you can examine it and see exactly how it works, you can verify any claims. By contrast, "Software as a Service" (SaaS), often called "cloud solutions", insists that you rely only on the supplier's 'assurances' that it is well behaved.

Gradually Increasing Demands

This is the old boiling a frog trick. When has computer security ever gotten easier? Regular passwords turned into unmemorable random gibberish, then into two-, then three-factor authentication with biometrics and crazy time-wasting puzzles to "prove you are a human".

The digital world is ever expanding into weirder systems of fake customer service, elaborate month-long password recovery adventures, bizarre ritualistic sign-in workflows, chatbots, and helpdesk tickets.

The official narrative is that security got complex because attackers got more sophisticated. That's true, but it is only part of the story. Security got complex because a massive security industry grew up, rushing in to paper over the faults of mostly awful software breathlessly written over three decades of reckless expansion.

Digital technology was forced onto society, taking on functions far beyond people's capacity or desire to absorb them.

Today we will not protest when asked to turn around three times, touch our noses, and recite the three digits of our aunt's dog's IQ backwards. It's now impossible to know which aspects of security are real and which are a joke designed to waste time and humiliate us. Likewise, we are less and less able to be sure whether people asking us "security questions" are legitimate or scammers. Funny as this may seem, absurdity and gaslighting are part of real psychological warfare tactics designed to discombobulate and sap morale.

Infuriatingly, big-tech companies are complicit in this. Their products are designed to suck you deeper and deeper into "engagement", giving up ever more personal information and autonomy to systems. CAPTCHA barriers, which have wasted literally billions of hours of human labour, were used to train AI image recognition, which can now trivially solve these "tests of humanity". The entire game is a bonfire of human value and goodwill.

Avoiding Personal Responsibility

Despite what was clearly established at Nuremberg, the lesson that "just following orders" does not evade personal responsibility for grave consequences has hardly touched the Western mind. Even though your institution or employer will likely throw you under a bus the moment any legal or financial harm needs dumping on someone, we continue to appeal to authority as cover for our ethical confusion.

Big companies are great places for the morally bewildered to seek cover, so this psychology still serves authoritarians well. Today we use terms like "best practice" and "policy" in place of military orders. Institutionalised workplaces actively discourage people from thinking and taking initiative, particularly around common-sense moral judgements.

The Power of Fear

Dreher cites the work of Timothy Snyder on how authoritarian leaders use fear, saying "they produce a toxic mix of fear, polarisation, scapegoating, and chronic anxiety that can undermine a free society".

Computers are extraordinary tools for fomenting fear. Many of the laws around them, like computer misuse laws, are broken. Computer laws disproportionately punish ordinary users for minor deeds like copying a file, while holding billion dollar mega-corporations harmless for ruining lives and wrecking society.

Computers are also used by security auditors with low emotional intelligence (EI) as tools for humiliation in internal phishing audits. (Please, don't do this. Let us help you understand the psychology of getting security buy-in.)

All this creates an atmosphere of confusion and fear that lets not only scammers but also big tech corporations terrify users with dishonest popups and alarming messages. Fear of "missing out", of having your account "cancelled", of being "fined" or of having money taken from you is used to trick people into things like Amazon sign-ups.

Projection onto systems and totems

I think we should also distinguish our own desire to unload personal responsibility from the desire of authority figures to do the same. Seldom will you find a 'professional manager' with skin in the game who is able to own their demands.

Again, in Milgram's obedience experiments, the "scientists" used impersonal language to pressure their subjects. They never said "I demand that you continue". That would be too direct and would invite resistance. Instead they said, "The experiment requires that you continue". This diffuses responsibility. Transcripts can be found in the Yale archives.

The outcome of all these behaviours is iatrogenic, because in this way security regulation like the GDPR actually becomes a weapon in the hands of the abuser. The authoritarian administrator who wants to impose their "security" on victims merely has to invoke the spectre of nebulous "systems" and "policies".

Developmental model of trustworthiness

Let's start to bring some of these threads together.

We seem drawn to strong, powerful, psychopathic forces, especially if they indulge our vices, allow us to be lazy and hide the truth from ourselves. Strangely IT and computers embody this "technofascist" element for many ordinary people who are cowed by technology. Big Tech companies epitomise this attractive but abusive creed.

We are happy to pay them for the right to stay ignorant. They are happy to sell us junk that merely creates more problems for which they sell more junk solutions. Somehow, not only are we held by this feeding cycle, we feel contented within it. We're always just one fix away from the perfect system and from existential security. Readers of Orwell's Nineteen Eighty-Four will recognise this ever-shifting landscape immediately.

Readers of a different flavour of psychology may instead recognise a common attachment pattern. John Bowlby worked on the stages of human development from childhood into adulthood, including attachment, separation and individuation.

Figure 4: "John Bowlby"

To become an adult we have to take on board responsibilities. We learn to make conversation, to win arguments, find and keep a job, manage money, start friendships, cook, clean, exercise and look after our body and mind.

In the digital age that includes basic digital skills and literacy. But society has not yet caught up with recognising what those qualities of the "digital adult" are.

In developmental psychology we recognise the horrible effects of violent, abusive and treacherous caregivers. We see how overbearing, suffocating mothers, demanding fathers and helicopter parents block maturation and cultivate dependency. Instead of growing up, we get adults in stages of arrested development with avoidant or disorganised ways of seeing the world.

People in this state make easy victims for predators who, like drug dealers, develop factitious (Munchausen by proxy) relations with them. They are kept in a perpetual state of insecurity, forever beholden to the suppliers of "security". Unlike a social contract or parental relationship, there is nothing to gain from this arrangement for the party that is held down, and no hope of exit from it.

Boudica's Model

We are going to very quickly revisit "trustworthiness" and highlight one condition.

Trustworthiness includes the freedom to join or leave.

This should feel natural, as all of the other conditions we saw earlier have counterparts in contract law: understanding, legibility, consideration. Very importantly, contract law also has entry and exit conditions, like capacity and termination.

Put more simply, nobody can be compelled to trust.

Unfortunately, as it is configured today, cybersecurity is blighted by a number of serious principal-agent problems. For the average person, computer security revolves around a handful of near-mandatory monopoly providers who create the problems, the solutions and increasingly the rules of the game.

These principal-agent problems seem so out of control that, despite the valiant efforts of the European Union, many security thinkers now feel that nation-state legislatures have given up.

Wherever we live, as computer users, whether on desktops or smartphones, we feel ever more as if in the presence of an occupying empire. This is why we believe in and promote Civic Cybersecurity.

Building an alternative

Amidst such a depressing and galling problem it became necessary to set up a different kind of cybersecurity company, and a different kind of cybersecurity education.

At Boudica we don't train you in how to secure your Google phone, Microsoft desktop or Amazon cloud against attackers. For us, Big Tech companies are the cybersecurity problem.

Many of the courses we run start with a detailed systems analysis and review of your attack surface, with one clear goal in mind - getting you free from Big Tech risks and into a state of manageable independence. It is "cybersecurity for grown-ups".

One of the exercises involves finding places where you "feel you have no choice", where you get stuck in patterns of mistakes, or where you use something risky simply because it feels good or is needed to inter-operate with a particular client or process.

Unsurprisingly, Boudica programmes are rooted as much in psychology as they are in mathematics, code and protocols.

Some radical security philosophies

We're not embarrassed to say that real security is like genuine love. It seeks not to control, make dependent or hold the other in debt. Instead the idea is to impart understanding, agency and empowerment. It is the proverbial "teaching a man to fish" or, as was once the aspiration of higher education, for students to "learn how to learn".

It would be easy to sell you something that makes you keep coming back. Instead, we're happiest when we never see our customers again, because they went off and got on with life, building their businesses or just feeling safe in the digital world.

At Boudica our model of security is founded on understanding, autonomy, radical scepticism, focus, minimalism, and elegance. It is more about what you do not do than what you do.

In any secure relationship the other is always an equal partner. We try to teach that principle not as a glib touchy-feely aside, but as a foundational principle of system design and technology. Obviously love that is forced is never for the benefit of its object. At best it is clumsy and pitiful, at worst it is harassment and rape.

Security that is forced on people is no security at all. At best it is simple commercial extraction; at worst it is a criminal protection racket. "For your own good" is never an acceptable reason. Security is always aligned with freedom. That includes "Ensler-type" security: freedom from other people's imposition of security.

Figure 5: Eve "V" Ensler: Insecure at Last

Today, millions of companies suffer extreme inefficiency because security is a burden on them. Ideas like "boss-ware" to spy on homeworkers, and surprise internal phishing raids, hurt everyone and cost a fortune in anxiety, suspicion, loss of goodwill and time spent circumventing and gaming systems. Bad security, which gets off on the wrong foot, is worse than no security at all, because the illusion of safety makes us all more vulnerable.

Perhaps the lesson to learn from Avast is not that they're a bad company who betrayed their users, but that relying on "security products" of any kind is a mistake.

Indeed, turning to big technology companies for security is a terrible mistake. They are blind profit machines essentially incapable of love and care. If all they have to offer is security without love, all they have to offer is a kind of tyranny.

Real security is a design stance, a mindset, a way of thinking and being that stops you from getting mixed up with substandard technology in the first place.

Much cybersecurity education and training is funded by sellers of security products and connected with big business. All are ultimately conflicted in their loyalty to their customers.

If you're interested in pioneering psychological approaches to cybersecurity come and visit us at Boudica. We teach principles not products, empowering knowledge and ideas that will last a lifetime and go with you wherever your career turns.

Acknowledgements

Thanks to Dr. Kate Brown for the jam hot attachment takes.